Numbers Never Speak for Themselves

from the PIT UNiverse Newsletter

Civic Engagement

April, 2024

Hearing the diagnosis was frightening, debilitating.

Yet there was an undeniable sense of relief,

for finally she was able to connect symptoms and feelings with explanations.

How does one’s body cope with so many contradictions at once?

With time, that which had frightened came to empower her.

She regained some control.

The turbulence settled. The treatments began. Her lifestyle adjusted.

A newfound hope and strength arrived.

Many of us can relate to a health scare like this, either through our own experience or that of a loved one. And if the human body is a metaphor for society, with all its beauty and its ailments, would we not want to dig beneath the surface of our collective symptoms, as reflected in our data, to move toward a healthier shared future?

Social data, whether numbers or words, are meant to reflect the functioning of society. But what lies underneath the data? Who is represented in the data, and who is not? What social processes and interactions generate such data?

We have taken big steps in capturing fine-grained data from human experience. We have developed tools to process, link, visualize, predict, and draw inferences from data. But the interpretations, predictions, and inferences we make depend not only on the data themselves, but also on the social processes that generate the data and on the assumptions with which we contextualize them.

If data science is to serve the public good, we must continually ask difficult questions about data, data provenance, and metadata, questions like:

Who is carrying the burden of disease but does not appear with a diagnosis in health records, and why?

How are people classified into racial, ethnic, and gender categories, and why?

Who is more likely to appear in administrative records documenting encounters with police, and why?

History provides a cautionary tale for those of us who seek to use data to advance social welfare. The history of the social sciences and social data is plagued by misuse, abuse, and flawed logic. “[This] is not an easy book. Nor should it be,” writes Khalil Gibran Muhammad in The Condemnation of Blackness, a history of racism in the early social sciences and in crime statistics. We learn how census and prison data fields were conceived in the early 1900s to sort people into more and less deserving groups, and how prominent social scientists and statisticians paired these data with flawed logic and assumptions to make causal claims portraying Black people as criminals.

We also learn of the courageous response of Black scholars and activists such as mathematician Kelly Miller, sociologist W. E. B. Du Bois, and journalist Ida B. Wells, whose carefully crafted counterarguments drew on data, logic, and experiential knowledge.

“For good or for bad, the numbers do not speak for themselves,” Dr. Muhammad writes in his conclusion. “The invisible layers of racial ideology packed into the statistics, sociological theories, and the everyday stories we continue to tell about crime in modern urban America are a legacy of the past. The choice about which narratives we attach to the data in the future, however, is ours to make.”

It was not until 2020, amid widespread protests against institutional racism, that academic associations acknowledged the role of data technologies in creating and sustaining systems of racial oppression. The risk that data technologies will be used to perpetuate, or even strengthen, discrimination is real; so is their potential to advance social justice.

A New Framework for Vetting Data Practices

For data practices to realize their potential of advancing social justice, we need new frameworks that integrate feedback from the communities represented in the data, and that account for the historical processes and biases that shape the data.

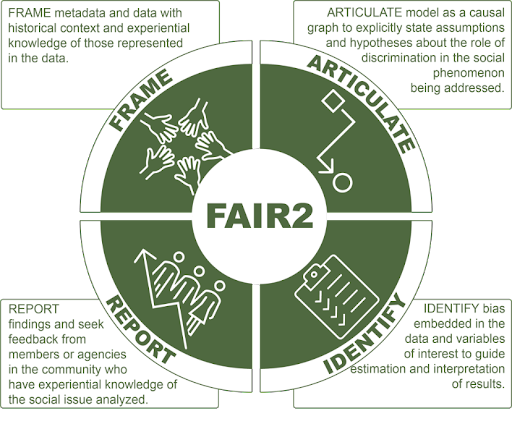

At Case Western Reserve University, with support from a PIT-UN Network Challenge grant, we developed FAIR2 (Frame, Articulate, Identify, Report), a framework for integrating community knowledge and addressing discrimination bias in data science. Inspired by the long history of pain and courage in the social sciences, and by recent literature from a range of public interest fields that use social data, FAIR2 complements the established standards of FAIRification (making data Findable, Accessible, Interoperable, and Reusable) by adding four principles specific to working with social data for societal impact:

Frame metadata and data with historical context and the experiential knowledge of those represented in the data. Data chats with the people whose experiences are represented in the data, along with qualitative literature and history, are key tools in the framing process.

Articulate the assumptions drawn from the framing stage as models represented by causal graphs, specifically Directed Acyclic Graphs (DAGs), in which we explicitly lay out our hypotheses about the role of discrimination in the social problem we are studying.

Identify bias embedded in the data and the variables of interest, following a set of guidelines proposed by researchers, with the aim of minimizing bias and reporting the limitations that remaining bias imposes.

Report findings to and seek feedback from members or agencies in the community who have experiential knowledge of the social issue analyzed.
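To make the Articulate step more concrete, here is a minimal sketch of how assumptions can be written down as a causal DAG and checked mechanically. It is not the FAIR2 team's tooling: the variable names and edges below are hypothetical, chosen only to echo the earlier question of who appears in police administrative records, and the code uses Python's standard-library `graphlib` (Python 3.9+) as a stand-in for dedicated causal-graph software.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical causal assumptions written as (cause, effect) pairs.
# These variables are illustrative only; they are not taken from the FAIR2 case studies.
EDGES = [
    ("residential_segregation", "policing_intensity"),
    ("residential_segregation", "neighborhood_resources"),
    ("neighborhood_resources", "offending"),
    ("policing_intensity", "police_record"),
    ("offending", "police_record"),
]


def parents_map(edges):
    """Map every node to the set of its direct causes (its parents in the DAG)."""
    parents = {}
    for cause, effect in edges:
        parents.setdefault(cause, set())
        parents.setdefault(effect, set()).add(cause)
    return parents


def check_acyclic(edges):
    """Return a causal ordering (causes before effects); raise ValueError on a cycle."""
    try:
        return list(TopologicalSorter(parents_map(edges)).static_order())
    except CycleError as err:
        # graphlib stores the offending cycle in the second element of err.args
        raise ValueError(f"assumptions do not form a DAG: {err.args[1]}") from err


def directed_paths(edges, source, target):
    """List every directed path from source to target (suitable for small DAGs)."""
    children = {}
    for cause, effect in edges:
        children.setdefault(cause, []).append(effect)
    paths, stack = [], [[source]]
    while stack:
        path = stack.pop()
        if path[-1] == target:
            paths.append(path)
            continue
        for nxt in children.get(path[-1], []):
            stack.append(path + [nxt])
    return paths


if __name__ == "__main__":
    print("causal order:", check_acyclic(EDGES))
    print("parents of police_record:", sorted(parents_map(EDGES)["police_record"]))
    for p in directed_paths(EDGES, "residential_segregation", "police_record"):
        print(" -> ".join(p))
```

Writing the hypotheses down this way makes one assumption explicit: under this illustrative graph, `police_record` has two parents, so group differences in records may reflect policing intensity and not only offending. A feedback loop, such as records driving future policing, would be rejected by `check_acyclic`; in a DAG such loops are usually represented by indexing variables over time.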

Take Action

In collaboration with community partners, we piloted FAIR2 in two case studies involving metadata on nutrition assistance and homelessness services. Detailed findings from the nutrition assistance study challenge the notion that data are inherently reliable, and show a clear pathway for guiding data analytics toward the public interest.

Our team developed FAIR2, with support from a PIT-UN grant, as a classroom tool for students pursuing the Data Science for Social Impact (DSSI) certificate at Case Western Reserve University. But its call to Frame, Articulate, Identify, and Report is extending beyond the classroom and into our research.

As FAIR2 grows and matures, we hope it will nurture our ability to integrate experiential knowledge into data analytics. We invite you to try it out and to get in touch with any questions or ideas about how to keep improving the methodology: fxr58 [at] case.edu.

Integrating the history and experiential knowledge of the people represented in the data allows us to dig deeper into the assets and the ailments of our society. As with our own personal ailments, uncovering this deeper knowledge can be frightening, yet it is necessary and empowering as we move forward together, with hope and strength, toward a society that supports all people in realizing their full potential.

Francisca García-Cobián Richter is a Research Associate Professor at the Jack, Joseph and Morton Mandel School of Applied Social Sciences, Case Western Reserve University. Her recent work includes estimating the effects of housing and neighborhood quality on children’s academic outcomes, evaluating a pay-for-success intervention targeted to families in the child welfare system facing housing instability, and assessing the economic cost of childhood exposure to domestic violence.