Making Data Science Democratic

Defending Democracy

November, 2023

Author: Dr. Amy Yeboah Quarkume, affectionately known as Dr. A, is a daughter of Africa, a scholar, filmmaker, data scientist, and Associate Professor of Africana Studies in the Department of Afro-American Studies at Howard University. Her work as a data scientist centers around AI Bias, data inequality, and environmental justice, and she directs Howard’s Master’s Program in Applied Data Science and CORE Futures Lab.

Gaps in Data, Gaps in Democracy

There is the democracy we think we live in, and then there is the democracy we are actually living in.

In the democracy we think we live in, individuals and communities have the power to shape their own futures, to define their own interests and act on them. In this scenario, the government is a supporter and protector of the public interest, providing infrastructure, education, public safety, clean water, and other basic needs. Data — how it is collected, managed, and deployed in public services — plays an increasingly important role in the public interest.

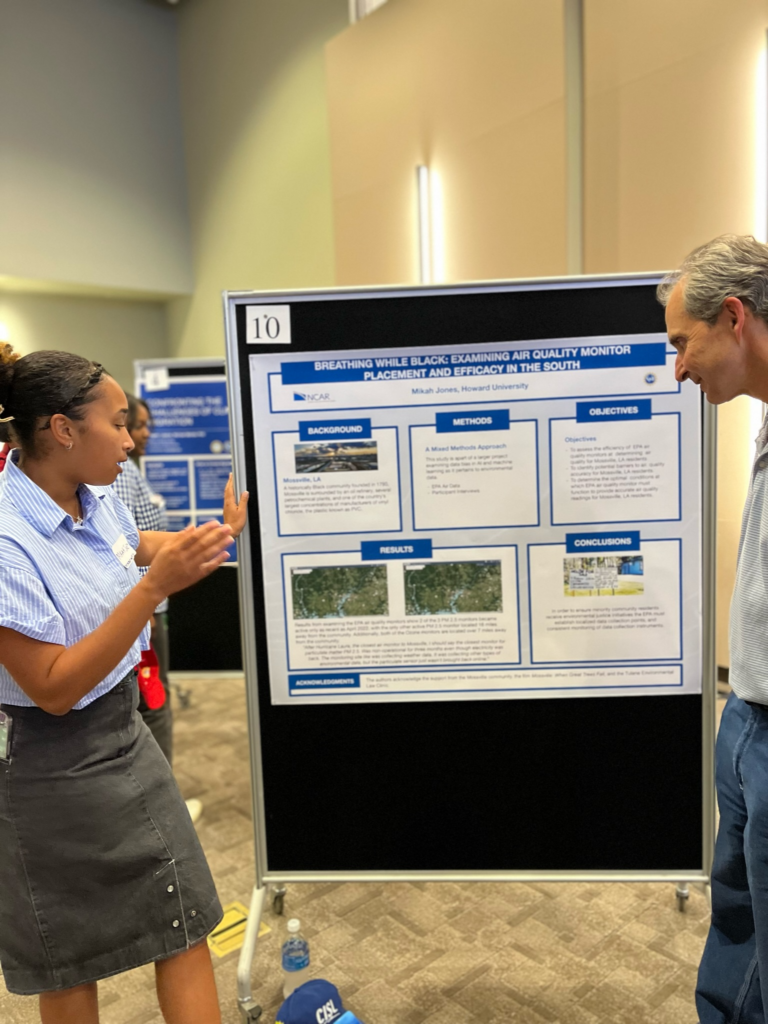

In the democracy we are actually living in, the government often fails to protect the public interest because of undemocratic data practices. Environmental protection is an area where this failure is evident, particularly for Black, Brown, and Native American communities. The historic Black town of Mossville, Louisiana is just one example. While the Environmental Protection Agency (EPA) declares Mossville’s air quality to be “good,” resident stories and on-the-ground reporting paint a very different picture: “a breeding ground for petrochemical plants and their toxic black clouds.” What explains this discrepancy?

Despite the fact that data steer much of the decision-making within governmental agencies, data collection and management often follow the same trend lines of racial and class inequality we see in housing, education, and health care. It’s clear that not all data points are weighed equally. In our highly technological, data-driven society, this discrepancy holds profound implications for the well-being and life of every individual — and for democracy itself.

Data, often perceived as an objective reflection of reality in its purest form, is inevitably shaped by the subjective human elements that influence its collection, interpretation, and application. In a democratic context, it’s imperative for data to mirror the multifaceted dimensions of society, capturing the intricacies of diverse demographics. As an interdisciplinary scholar of Africana studies, data science, and environmental justice, I see data science as a collaborative practice of inquiry and research with students and communities. Through education and practical engagement, I aim to empower them to collect, critically analyze, and ethically deploy data in ways that uphold democratic ideals for all communities.

Guiding Principles of the CORE Futures Lab

At the CORE Futures Lab, which I direct at Howard University, we practice four key principles: community-centeredness, openness, research, and equity. Contrary to the widespread belief that data alone holds the answers to society’s challenges, we emphasize how growing gaps in government data and the lack of democratic control over our data compound existing issues and inequalities. While we acknowledge the abundance of available data, we are also mindful of the problems that arise from the singular white, male, and cisgender perspective that dominates the tech industry and the field of data science. To create comprehensive solutions, we empower underrepresented communities and foster collaboration among diverse voices. Our vision is to discover innovative approaches that address both known and unknown challenges, unveiling previously unseen possibilities.

The lab is intergenerational by design. I believe students and young scholars, not just professors, must be involved in the collection of data and creation of new knowledge. Within data science, the norm is that data from the past — rife with historical biases we do not want to replicate — is used to predict the future. Not involving young people in the pursuit of data science is highly problematic. After all, it’s their future.

In the CORE Futures Lab, we bring together middle and high school students, undergraduates, graduate students, and community partners to tap into the power of data. Students learn how to center community data and run community-centered projects. They get to experience contributing to a research team that looks like them, and they get to present their work as authors to the scientific community. I often hear from these students a key realization: “I don’t have to wait to get to college or graduate school to work with data in a meaningful way.”

Our research process, guided by Greg Carr’s Africana Studies framing question, starts with learning about the history of the community we are engaging with: Who are the people being studied? Where did they come from, and how did they come to the experience being studied? How do people view themselves, their origin, and their world in any given time and place?

Then we define our methods: How will we co-capture, co-collect, and co-create data, and how will we share the data on agreed-upon terms, in a manner that is beneficial for the community? Validation of the data doesn’t come through our degrees or institutions or even through publication; it comes from the community we work with.

Then we assess whether the data reflects the community. If not, we start the research process again. Linda Tuhiwai Smith describes in Decolonizing Methodologies: Research and Indigenous Peoples how research has historically been a dirty word in Black, Brown, and Native American communities. It is incumbent upon us as researchers to begin to mend the relationship.

Community-Centered Water, Air & Heat Mapping

What happens when your local news station, state Department of Environmental Quality, or the federal EPA can’t disclose or provide data to explain the strangely colored skyline and funny odor you see and smell in the morning — not because they don’t want to, but because they don’t have data that is specific to your community?

In a 2019 New York Times article, AI scientists Daphne Koller, Olga Russakovsky, and Timnit Gebru identified four issues of concern within data management and AI: data, algorithmic bias, a lack of ethical standards, and cultural consciousness training. I applied these four issues to frame the primary objectives in my research project “Data Pollution and Savage Algorithms,” supported by the National Center for Atmospheric Research’s Innovator Program. This two-year research study illuminates the dark side of AI and data science in the field of earth science and sheds light on how marginalized Black, Brown, and tribal communities are disenfranchised by the very technology that promises to enhance our world.

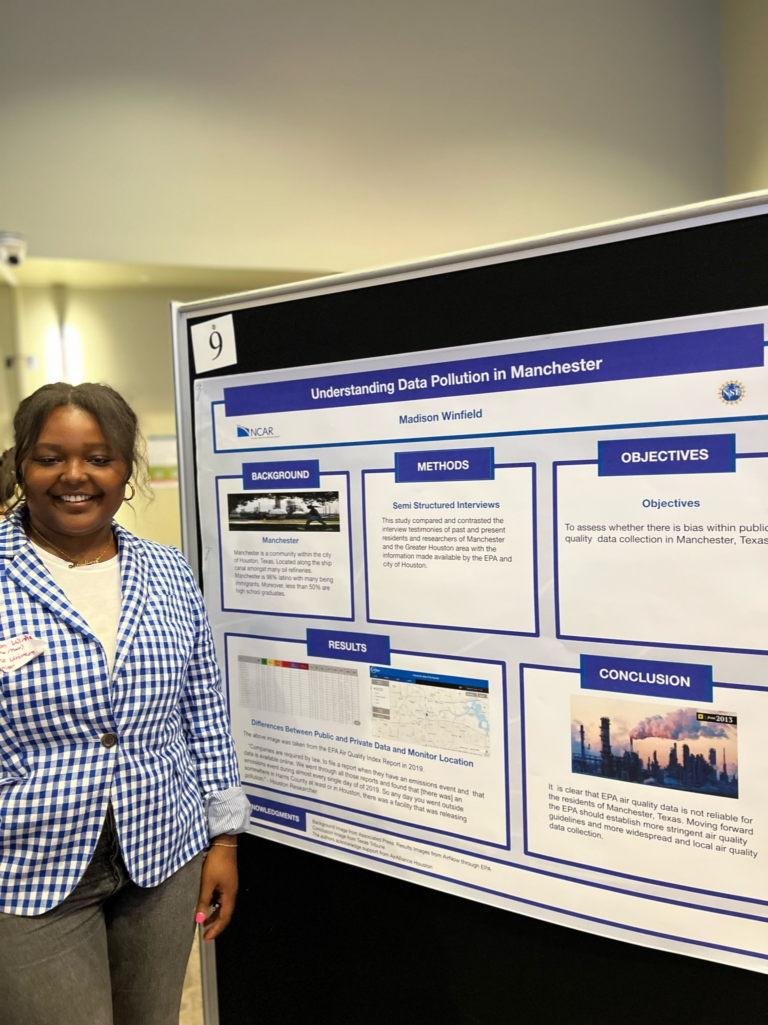

The project focused on six communities of color across the U.S. facing environmental health crises, each with its own label of notoriety: “Climate Gentrification” in Little Haiti (Miami); the “Open Sewer” of the Bronx River in New York City; the “Asthma Capital” of Maryland and the District of Columbia; Houston’s “PetroMetro”; and Louisiana’s “Cancer Alley.”

Despite these communities facing life-threatening living conditions, including lack of clean water and air and extreme heat, official government reports often classify living conditions there as “good.” Yet, the research shows that communities of African, Hispanic, and Native American descent face heightened exposure to air pollution compared to their white counterparts, bearing the burden of environmental racism akin to modern-day Jim Crow. “Data Pollution and Savage Algorithms” discloses how data pollution and systemic racism in earth science data undermine the very communities the U.S. Environmental Protection Agency is meant to protect.

In the first phase of the project, we surveyed eight communities of color across the U.S. on water, air, and heat issues by mailing air quality filters and thermometers to households and then comparing their responses to EPA reporting. Our findings confirmed that the EPA’s monitoring and reporting systems mirror the racial and class inequalities evident in housing and other areas.
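The comparison described above, household readings set against official reporting, can be pictured with a minimal Python sketch. It is not the lab's actual analysis: the function name `reporting_gap` and every number below are hypothetical placeholders chosen only to show the arithmetic.

```python
from statistics import mean


def reporting_gap(household_readings, official_value):
    """Return the mean household reading minus the official value.

    A positive result means households measured more than was
    officially reported for the same period.
    """
    if not household_readings:
        raise ValueError("need at least one household reading")
    return mean(household_readings) - official_value


# Hypothetical placeholder values, NOT data from the study.
household_heat_index_f = [101.0, 99.0, 103.0]
official_heat_index_f = 95.0

gap = reporting_gap(household_heat_index_f, official_heat_index_f)
print(f"Households read {gap:+.1f} F relative to the official figure")
```

A real comparison would also need to match readings by time and location and account for sensor calibration, which this sketch leaves out.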

To take just one example, in our research with Little Haiti it became evident that the nearest EPA heat monitor is at the Miami airport, miles away from the community, resulting in EPA reporting that substantially understated the heat index residents actually experienced. Without accurate data on living conditions in communities like Little Haiti, how can the EPA possibly deliver on its mission, which says the EPA “protects people and the environment from significant health risks … and develops and enforces environmental regulations”?
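One way to make the monitor-distance problem concrete is to compute how far a community sits from its nearest official monitor. The sketch below uses the standard haversine great-circle formula. The function names and the coordinates are illustrative assumptions (rough, unverified locations), not measurements from the project.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_monitor(community, monitors):
    """Return (name, distance_km) of the monitor closest to the community."""
    distances = (
        (name, haversine_km(community[0], community[1], lat, lon))
        for name, (lat, lon) in monitors.items()
    )
    return min(distances, key=lambda pair: pair[1])


# Approximate, illustrative coordinates only (assumptions, not survey data).
community = (25.82, -80.19)
monitors = {
    "airport": (25.80, -80.29),
    "distant_site": (26.07, -80.15),
}

name, km = nearest_monitor(community, monitors)
print(f"Nearest monitor: {name}, about {km:.1f} km away")
```

Distance alone does not prove a reading is wrong, but it flags where official data may not describe local conditions and where community-run sensors would add the most.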

In the second phase of research, we focused on developing literacy by helping the communities articulate the results and make meaning of their situation. We asked fundamental questions about the problem, such as: “What does water mean to you? Why is it important to your life?” followed by evaluative questions such as: “What’s wrong with the water you have access to?” and finally, articulating next steps: How should the water be cleaned? Who should be involved?

In its third phase, the project, Breathable Futures, is shifting toward helping the community create a data ethics policy for our team to deploy water, air, and temperature monitors in the community. We will use a low-cost, modular, extensible, and pint-sized 3D-printed weather station based on Internet of Things technology. Keith Maull and Agbeli Ameko, the NCAR scientists who developed openIoTwx, constructed it based on open-source, FAIR, and CARE principles. The platform is tailored to fit community-specific needs and is committed to community-maintained data sovereignty and control.

CORE Futures Lab students presenting our work at a conference.

Real Democracy Requires Democratic Data

Data is the bedrock for making informed decisions, shaping policies, and allocating resources fairly and equitably. Without democratic data, members of the Bronx River Alliance struggle to make healthy decisions to support their families. Without knowing what is happening in the water, the community lacks the power of data to envision a better future.

Access to accurate and inclusive data is vital to address systemic inequalities, enabling our communities to advocate for fair representation and just treatment. A data framework rooted in democracy empowers community members by granting them the opportunity to confront entrenched biases of the past and ongoing inequalities, creating pathways towards the democratic promise of government that is, truly, by the people and for the people.